Visual Attention

How do we perceive the world around us? It’s a seemingly simple question that gets devilishly hard the deeper one dives into it. It’s really mind-blowing how much work our central nervous system does (not just in the brain but also in the eye itself!) to turn those photons into dogs, cats, trees, and loved ones.

My work in this area focuses on a few fundamental questions.

1) What is the time course of visual processing and attention?

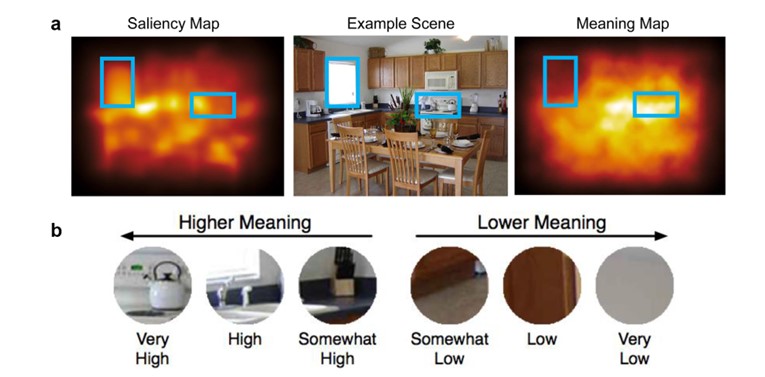

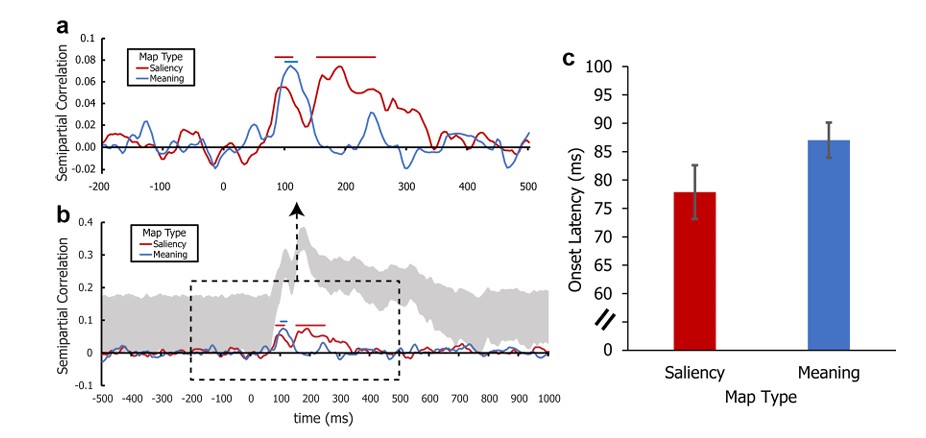

Everything we see is made up of visual features. These range from simple things like lines and colors to more complicated things like textures, contours and so on. One of the things I’m interested in understanding is how quickly our brains can begin to process these features in real-world scenes. For example, in one of my papers, I looked at the difference between how long it took for the brain to process simple visual features (i.e., Saliency) in real-world scenes versus more complicated ones that were more closely related to understanding what things “are” (i.e., “Meaning” or semantic informativeness).

To do this, we recorded electrical activity from the brain while subjects looked at real-world scenes and used modeling techniques to figure out at what point in time information predictive of each type of feature began to emerge in the neural signal. It turned out to be a pretty close race! Saliency-related information emerged about 78 milliseconds after viewing a scene, with meaning-related information emerging a mere 9 milliseconds later.

Kiat, J.E., Hayes, T.R., Henderson, J.M., Luck, S.J. (2022). Rapid extraction of the spatial distribution of physical saliency and semantic informativeness from natural scenes in the human brain. Journal of Neuroscience, 97-108
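To give a rough feel for what "the point in time at which information begins to emerge" can mean in practice, here is a minimal sketch of one common way to define an onset latency: the first time point at which a decoding or model-fit score stays above a threshold for several samples in a row. This is an illustration only, not the analysis used in the paper. The function name `onset_latency`, the threshold, the consecutive-sample rule, and the toy numbers are all assumptions made up for this example.

```python
def onset_latency(times_ms, scores, threshold, min_consecutive=3):
    """Return the first time (in ms) at which `scores` exceeds `threshold`
    for `min_consecutive` samples in a row, or None if it never does.

    Hypothetical helper: requiring a run of samples is a simple guard
    against single noisy time points crossing the threshold.
    """
    run = 0  # length of the current above-threshold run
    for i, score in enumerate(scores):
        run = run + 1 if score > threshold else 0
        if run == min_consecutive:
            # Report the start of the run, not the sample that completed it.
            return times_ms[i - min_consecutive + 1]
    return None


# Toy, made-up time course (NOT real data): one score per 20 ms step.
toy_times = list(range(0, 200, 20))
toy_scores = [0.00, 0.01, 0.06, 0.01, 0.00, 0.02, 0.07, 0.09, 0.12, 0.10]

# The isolated blip at 40 ms is ignored; the sustained rise starts at 120 ms.
toy_onset = onset_latency(toy_times, toy_scores, threshold=0.05, min_consecutive=2)
print(toy_onset)
```

In real work the threshold would normally come from a statistical test (for example, a permutation-based criterion) rather than a hand-picked constant.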

2) How can we best model how the brain represents complex visual information?

Speaking of complex visual features, another interesting question is how our brains represent complex real-world scenes. Naturally, there have been hundreds, if not thousands, of studies looking at visual memory. However, the vast majority of studies in this area focus on arrays of simple objects (e.g., circles, squares, etc.) as opposed to complex real-world scenes. Now, this research is certainly valuable and super important, but it would be really cool to also work on understanding how our brains store the type of visual information all of us process in almost every moment of our waking lives.

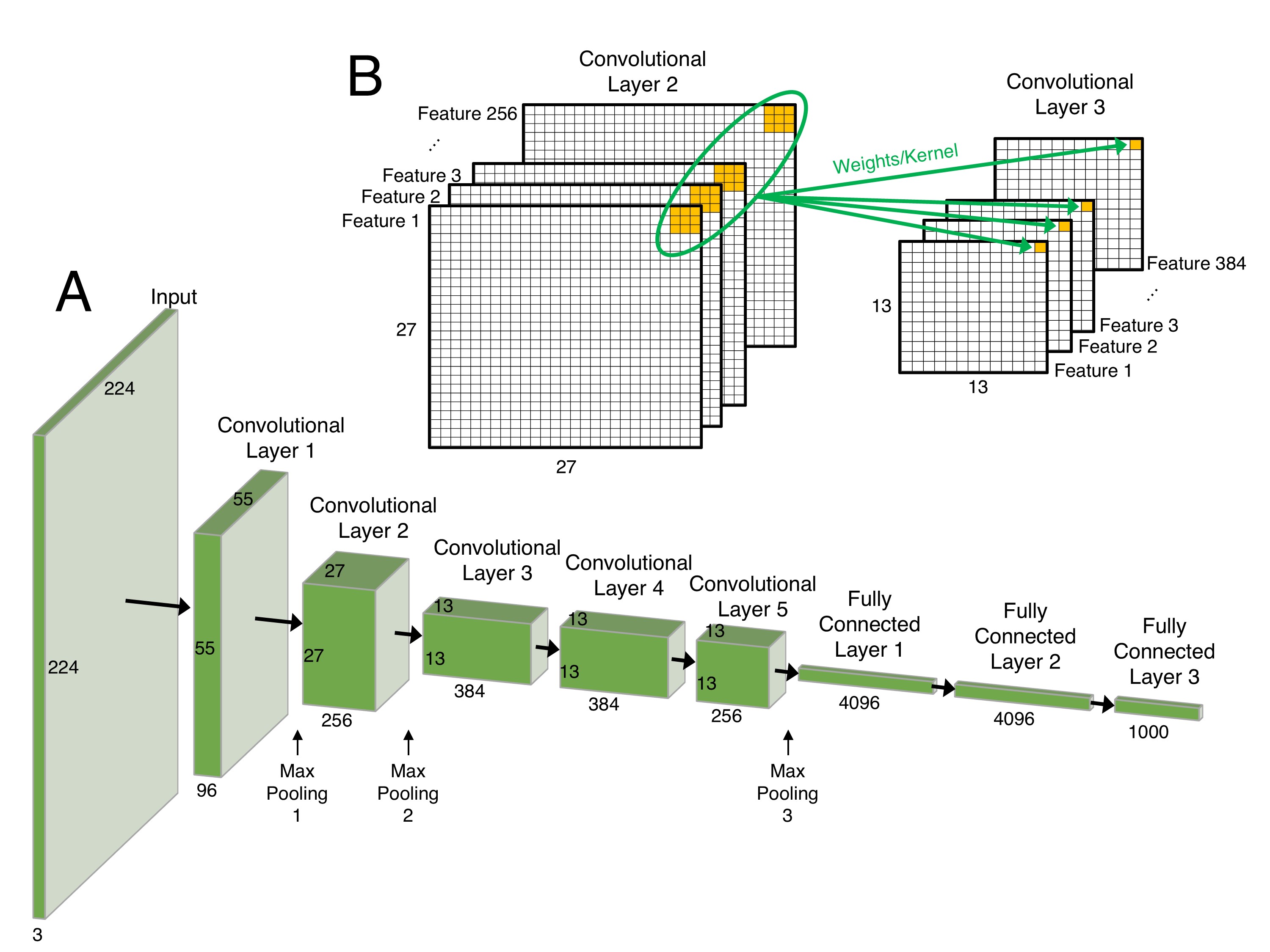

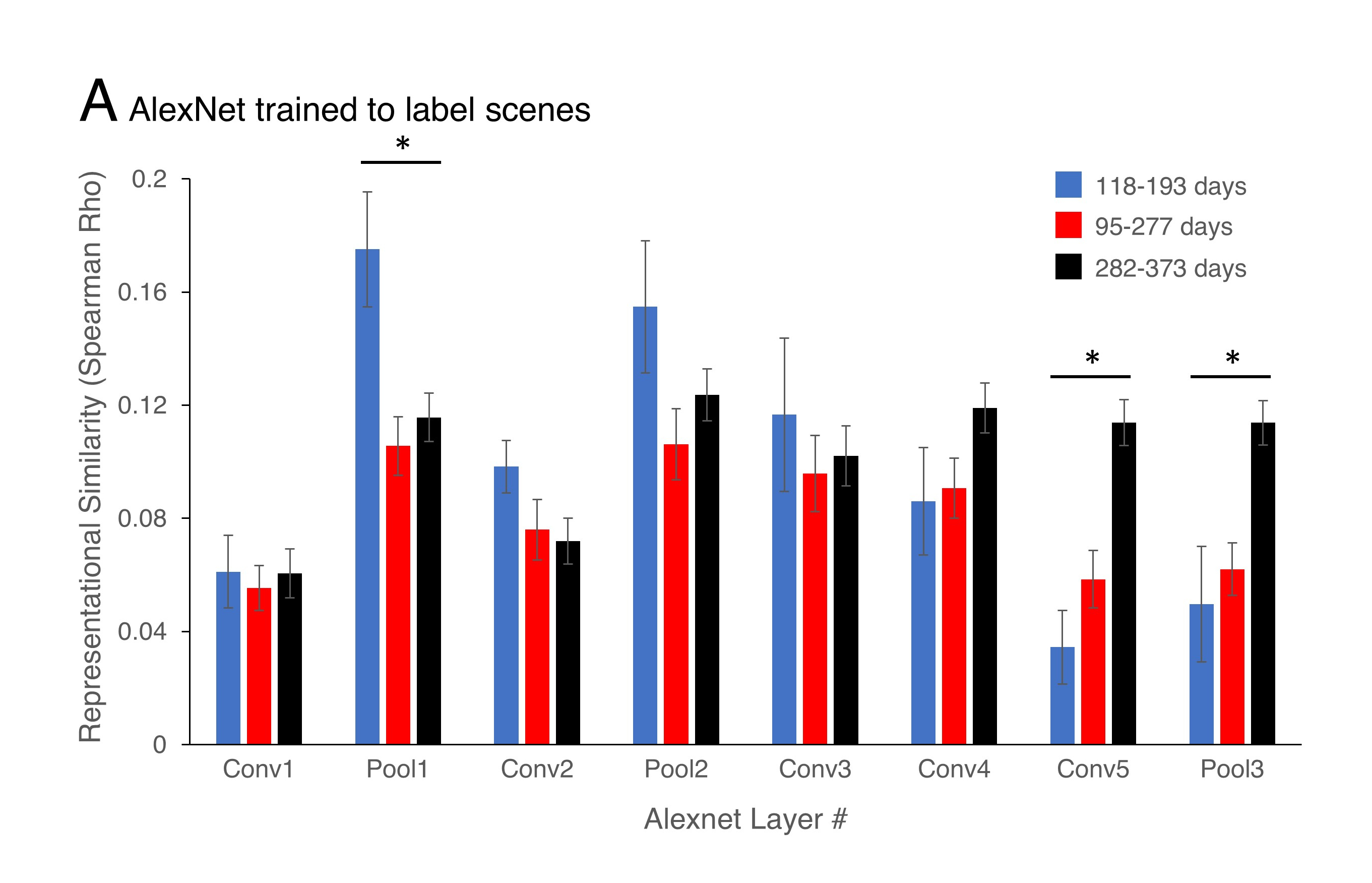

To achieve this, Steve and I have been working on using the activation outputs from neural network models (computer programs designed to recognize patterns in data and combine that information to “understand” things) to capture the gradient of abstraction (i.e., the progression of visual features from simple lines to complex combinations of texture and contours) in real-world visual input.

We hope to have a full paper on this approach, involving neural data, out soon. For now, we’ve shown that it works pretty well for understanding what drives the visual attention of younger and older infants, which is a promising start!

Kiat, J.E., Luck, S.J., Beckner, A.G., Hayes, T.R., Pomaranski, K.I., Henderson, J.M., Oakes, L.M. (2022). Linking Patterns of Infant Eye Movements to a Neural Network Model of the Ventral Stream Using Representational Similarity Analysis. Developmental Science.
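For readers curious about what representational similarity analysis (RSA) looks like mechanically, here is a minimal, standard-library-only sketch. The idea is to describe each dataset by how dissimilar every pair of images is within it, then check whether two datasets agree on which pairs are similar and which are not. This is a toy illustration under stated assumptions, not the pipeline from the paper: the choice of 1 minus Pearson correlation as the dissimilarity measure, the rank (Spearman-style) comparison, all function names, and the toy numbers are assumptions made up for this example.

```python
from itertools import combinations
from math import sqrt


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


def rdm(patterns):
    """Upper triangle of a representational dissimilarity matrix:
    1 - Pearson r for every pair of items (one pattern per image)."""
    pairs = combinations(range(len(patterns)), 2)
    return [1 - pearson(patterns[i], patterns[j]) for i, j in pairs]


def ranks(values):
    """Ranks starting at 1, with tied values sharing their mean rank."""
    order = sorted(range(len(values)), key=lambda k: values[k])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            result[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return result


def rsa_score(patterns_a, patterns_b):
    """Rank correlation between the two RDMs (higher = more similar geometry)."""
    return pearson(ranks(rdm(patterns_a)), ranks(rdm(patterns_b)))


# Toy, made-up data (NOT real): 4 images, 3 features each.
toy_layer_acts = [[1.0, 0.0, 2.0], [0.9, 0.1, 2.1], [0.0, 3.0, 1.0], [0.2, 2.8, 0.9]]
toy_gaze_maps = [[0.8, 0.1, 0.1], [0.7, 0.2, 0.1], [0.1, 0.2, 0.7], [0.1, 0.1, 0.8]]

toy_score = rsa_score(toy_layer_acts, toy_gaze_maps)
print(toy_score)
```

Because the comparison happens between dissimilarity structures rather than raw values, the two datasets do not need the same number of features or even the same kind of measurement, which is what makes it possible to relate network activations to something like eye-movement patterns.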

3) How are 1 & 2 impacted by individual traits and state-level differences?

Last but not least, in a currently active line of research, I’ve been working on understanding how individual differences in specific traits and states change the way our brains process and represent visual features and information. This has proven to be an extremely exciting line of work, so I’m looking forward to sharing more in this space once the papers are out!